Are You Making These DeepSeek Errors?

Posted by Carrie on 25-02-17 23:28

Unlike DeepSeek Coder and other models, it was released in July 2024 with 236 billion parameters. It also has multilingual support, so it can translate languages, summarize texts, and detect the emotions in prompts using sentiment analysis. DeepSeek-V3 is designed to handle a wide range of tasks with 671 billion parameters and a context length of 128,000 tokens. It is pre-trained on 14.8 trillion diverse, high-quality tokens, followed by Supervised Fine-Tuning and Reinforcement Learning stages.

When it comes to automation, it can handle repetitive tasks like data entry and customer support. DeepSeek uses advanced machine learning models to process information and generate responses, which makes it capable of handling a variety of tasks. For document analysis and summarization, you can attach files such as PDFs and ask it to extract key information or answer questions about the content. There is also no need for a credit card or payment details to sign up or access the app's tools.

This makes it possible to deliver powerful AI features at a fraction of the cost, opening the door for startups, developers, and companies of all sizes to access cutting-edge AI. But it's also possible that these innovations are holding DeepSeek's models back from being truly competitive with o1/4o/Sonnet (let alone o3).

The benchmarks are pretty impressive, but in my view they really only show that DeepSeek-R1 is genuinely a reasoning model (i.e. the extra compute it spends at test time is actually making it smarter). Likewise, if you buy a million tokens of V3, it costs about 25 cents, compared to $2.50 for 4o and about $60 for o1. Doesn't that mean the DeepSeek models are an order of magnitude more efficient to run than OpenAI's? It's also unclear to me that DeepSeek-V3 is as strong as these models. If o1 was much more expensive, it's probably because it relied on SFT over a large volume of synthetic reasoning traces, or because it used RL with a model-as-judge. While developing DeepSeek, the firm focused on creating open-source large language models that improve search accuracy. It recently unveiled Janus Pro, an AI-based text-to-image generator that competes head-on with OpenAI's DALL-E and Stability's Stable Diffusion models. Developed by a Hangzhou-based startup, the newest DeepSeek product was released on January 20 and, within days, took the title of most popular app on Apple's App Store from OpenAI's ChatGPT.
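
The "order of magnitude" question is simple arithmetic on the quoted prices. Here is a minimal sketch, assuming the post's rough per-million-token figures (not an official price sheet, and ignoring the usual split between input and output token prices), that computes how many times more each OpenAI model costs than DeepSeek-V3:

```python
# Rough prices quoted in the post, in US dollars per million tokens.
# Assumption: a single blended price per model; real price lists
# distinguish input and output tokens and change over time.
PRICE_PER_MILLION_TOKENS = {
    "DeepSeek-V3": 0.25,
    "GPT-4o": 2.50,
    "o1": 60.00,
}


def cost_ratio(model: str, baseline: str = "DeepSeek-V3") -> float:
    """Return how many times more `model` costs per token than `baseline`."""
    return PRICE_PER_MILLION_TOKENS[model] / PRICE_PER_MILLION_TOKENS[baseline]


def cost_for(model: str, tokens: int) -> float:
    """Return the dollar cost of `tokens` tokens at the quoted per-million price."""
    return PRICE_PER_MILLION_TOKENS[model] * tokens / 1_000_000


if __name__ == "__main__":
    for name in ("GPT-4o", "o1"):
        print(f"{name}: {cost_ratio(name):.0f}x the price of DeepSeek-V3")
```

Running it prints `GPT-4o: 10x the price of DeepSeek-V3` and `o1: 240x the price of DeepSeek-V3`. A 10x price gap is exactly one order of magnitude; whether that gap reflects cheaper serving or just different pricing is the open question the paragraph raises.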

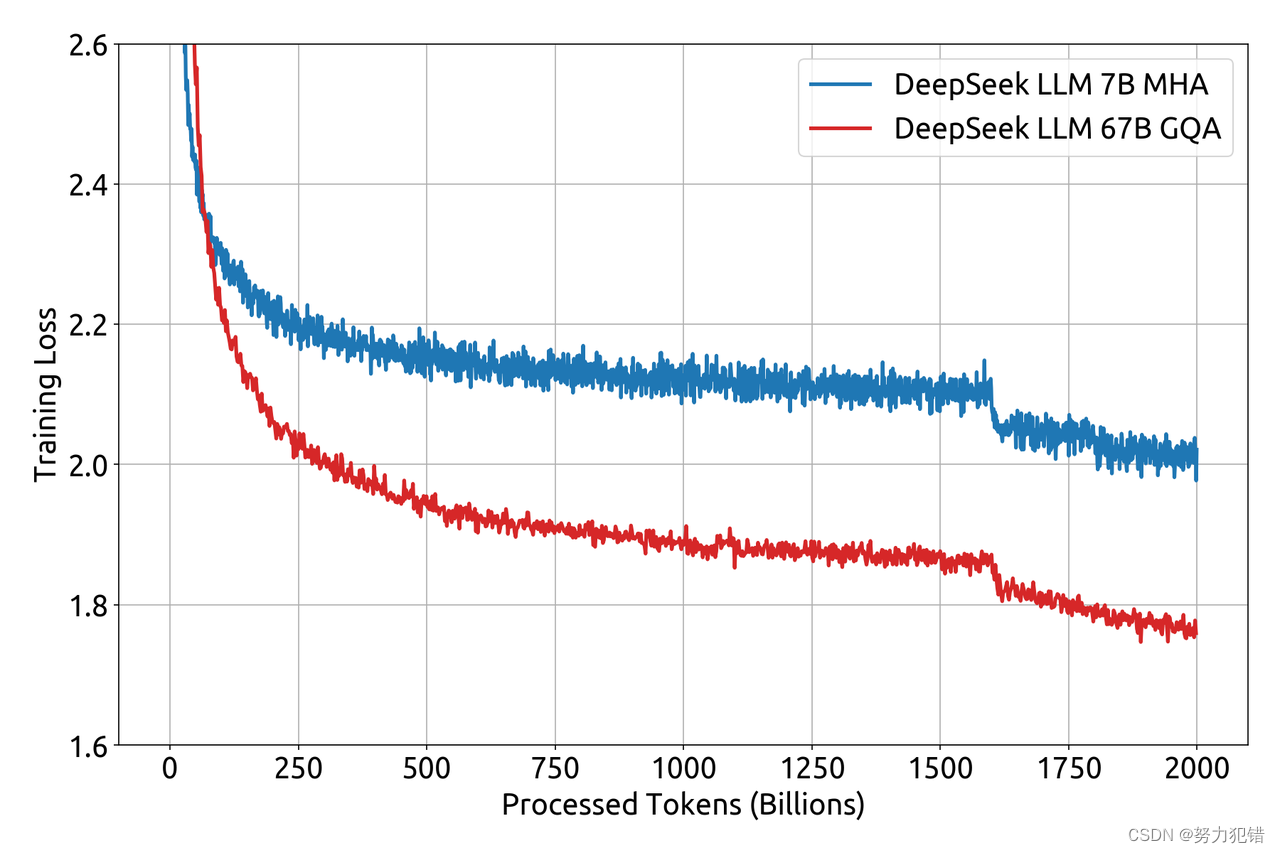

China's new DeepSeek AI app has taken social media by storm, becoming one of the most popular meme characters on X since its launch last week. The DeepSeek iOS app globally disables App Transport Security (ATS), an iOS platform-level protection that prevents sensitive data from being sent over unencrypted channels. To compare censorship levels, we queried four Chinese chatbots on political questions and compared their responses on Hugging Face (an open-source platform where developers can upload models, which are subject to less censorship there) with their responses on their Chinese platforms, where CAC censorship applies more strictly. Thanks to its rapid technical progress, the platform hit the 10 million user mark within 20 days. Why not simply spend $100 million or more on a training run, if you have the money? By incorporating 20 million Chinese multiple-choice questions, DeepSeek LLM 7B Chat achieves improved scores on MMLU, C-Eval, and CMMLU. Currently, DeepSeek LLM 7B/67B Base and DeepSeek LLM 7B/67B Chat are open source for the research community. The model enhances the search experience by understanding the context and intent behind each query.

Just paste the equation, type "Solve this equation and explain each step," and it will solve the equation step by step and explain the reasoning behind each move. The choice between DeepSeek and ChatGPT will depend on your needs. DeepSeek may show that cutting off access to a key technology doesn't necessarily mean the United States will win. For example, in healthcare settings where fast access to patient data can save lives or improve treatment outcomes, professionals benefit greatly from the fast search capabilities DeepSeek offers. This stage provided the biggest performance boost. Some models struggled to follow through or produced incomplete code (e.g., StarCoder, CodeLlama). DeepSeek-Coder-V2 is an open-source Mixture-of-Experts (MoE) code language model that achieves performance comparable to GPT-4 Turbo on code-specific tasks. In December, DeepSeek published a research paper accompanying the model behind its popular app, but the paper leaves many questions, such as total development costs, unanswered. Many users wonder whether DeepSeek chat and OpenAI's GPT models are the same. DeepSeek is a newly launched advanced artificial intelligence (AI) system similar to OpenAI's ChatGPT. Built to apply AI to a wide range of purposes, DeepSeek chat has several key features that make it a compelling alternative.
