The Key To DeepSeek

Posted by Elvis on 25-02-03 16:34

Chinese AI startup DeepSeek has launched DeepSeek-V3, a large 671-billion-parameter model that posts strong benchmark results and rivals top proprietary systems. Its performance in benchmarks and third-party evaluations positions it as a strong competitor to proprietary models. Qwen 2.5 72B is probably still underrated based on these evaluations. While encouraging, there is still much room for improvement. However, there are a few potential limitations and areas for further research that could be considered. There is more data than we ever forecast, they told us. By leveraging a vast amount of math-related web data and introducing a novel optimization technique called Group Relative Policy Optimization (GRPO), the researchers have achieved impressive results on the challenging MATH benchmark. Researchers with Align to Innovate, the Francis Crick Institute, Future House, and the University of Oxford have built a dataset to test how well language models can write biological protocols: "accurate step-by-step instructions on how to complete an experiment to accomplish a specific goal".
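To make the "group relative" part of GRPO concrete, here is a minimal sketch of the advantage computation as it is usually described: several answers are sampled for the same prompt, each gets a reward, and each reward is normalized against the mean and standard deviation of its own group. The function name, the `eps` constant, and the use of the population standard deviation are assumptions made for illustration. This is not DeepSeek's training code, and it leaves out the policy update itself.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against the mean and std of its own group.

    `rewards` holds one scalar reward per sampled answer to the same prompt.
    `eps` (an illustrative choice) avoids division by zero when every
    answer in the group receives the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical group of four sampled answers: two correct (1.0), two wrong (0.0).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)

# A group where every answer scores the same carries no learning signal.
flat = group_relative_advantages([1.0, 1.0, 1.0])
```

Because the baseline comes from the group itself, answers that beat their siblings get a positive advantage and the rest get a negative one, without training a separate value model.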

DeepSeekMath 7B achieves impressive performance on the competition-level MATH benchmark, approaching the level of state-of-the-art models like Gemini-Ultra and GPT-4. These models are better at math questions and questions that require deeper thought, so they usually take longer to answer, but they present their reasoning in a more accessible fashion. Furthermore, the researchers show that leveraging the self-consistency of the model's outputs over 64 samples can further improve performance, reaching a score of 60.9% on the MATH benchmark. To address the challenge of mathematical reasoning, the researchers behind DeepSeekMath 7B took two key steps. The paper attributes the strong mathematical reasoning capabilities of DeepSeekMath 7B to two key factors: the extensive math-related data used for pre-training and the introduction of the GRPO optimization technique. The paper presents a compelling approach to improving the mathematical reasoning capabilities of large language models, and the results achieved by DeepSeekMath 7B are impressive. Some models generated pretty good results and others terrible ones. We are actively working on more optimizations to fully reproduce the results from the DeepSeek paper. The DeepSeek MLA optimizations were contributed by Ke Bao and Yineng Zhang.
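Self-consistency over multiple samples is commonly implemented as a majority vote over the final answers. The sketch below assumes the final answers have already been extracted from each sampled solution as strings. The function name and the sample data are hypothetical, and the paper's exact answer-matching rules are not reproduced here.

```python
from collections import Counter


def majority_vote(answers):
    """Return the most frequent final answer among the sampled solutions.

    Answers are compared after stripping surrounding whitespace. Ties go to
    the answer that was seen first, which is how Counter.most_common orders
    elements with equal counts.
    """
    counts = Counter(a.strip() for a in answers)
    answer, _ = counts.most_common(1)[0]
    return answer


# Hypothetical final answers from five sampled solutions to one problem.
sampled = ["42", "41", " 42", "42", "7"]
final = majority_vote(sampled)
```

With 64 samples per problem, as in the result quoted above, the same function would be applied to a list of 64 extracted answers.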

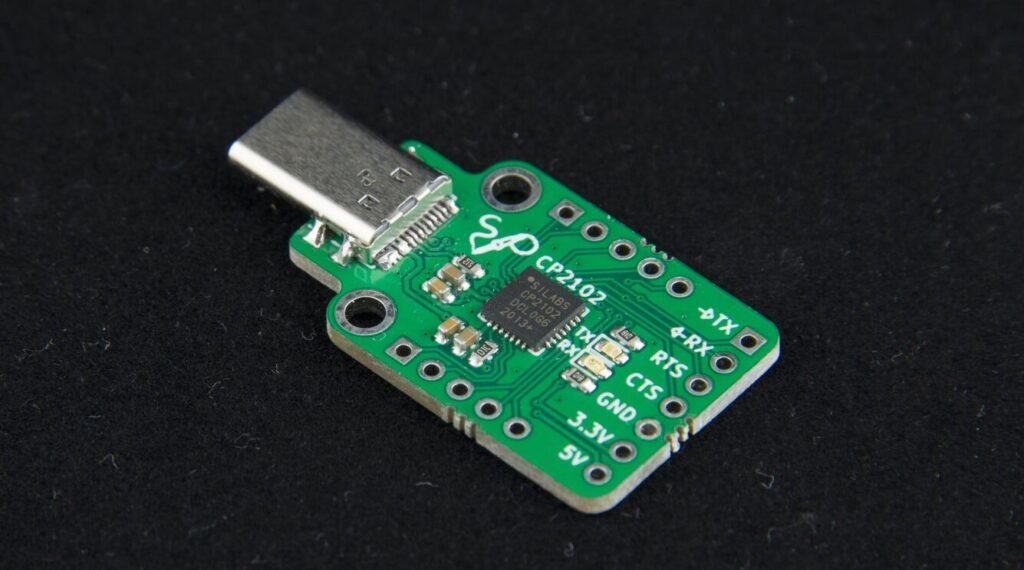

Multi-head Latent Attention (MLA) is a new attention variant introduced by the DeepSeek team to improve inference efficiency. vLLM v0.6.6 supports DeepSeek-V3 inference for FP8 and BF16 modes on both NVIDIA and AMD GPUs. In this article, we will explore how to use a cutting-edge LLM hosted on your own machine and connect it to VSCode for a powerful, free, self-hosted Copilot or Cursor experience without sharing any information with third-party services. To use Ollama and Continue as a Copilot alternative, we will create a Golang CLI app. While it responds to a prompt, use a command like btop to check whether the GPU is being used efficiently. 1 before the download command. This allowed the model to learn a deep understanding of mathematical concepts and problem-solving strategies. By spearheading the release of these state-of-the-art open-source LLMs, DeepSeek AI has marked a pivotal milestone in language understanding and AI accessibility, fostering innovation and broader applications in the field. Lean is a functional programming language and interactive theorem prover designed to formalize mathematical proofs and verify their correctness. Common practice in language modeling laboratories is to use scaling laws to de-risk ideas for pretraining, so that you spend very little time training at the largest sizes that do not result in working models.
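As a rough illustration of talking to a locally hosted model, the sketch below builds a request for Ollama's `/api/generate` HTTP endpoint on its default local port. The article mentions a Golang CLI; Python is used here purely for illustration. The helper names and the model name `deepseek-coder` are assumptions, so substitute whichever model you have actually pulled.

```python
import json
import urllib.request

# Default address of a local Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt, model="deepseek-coder", url=OLLAMA_URL):
    """Build (but do not send) a non-streaming generate request.

    The model name is a placeholder assumption, not a recommendation.
    """
    payload = {"model": model, "prompt": prompt, "stream": False}
    data = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="deepseek-coder"):
    """Send the request to the local server and return the generated text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["response"]


# Usage, with an Ollama server running locally:
#   print(generate("Write a function that reverses a string."))
```

Nothing leaves the machine in this setup: the request goes to `localhost`, which is the point of the self-hosted approach described above.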

We turn on torch.compile for batch sizes 1 to 32, where we observed the most acceleration. We are actively collaborating with the torch.compile and torchao teams to incorporate their latest optimizations into SGLang. The torch.compile optimizations were contributed by Liangsheng Yin. In SGLang v0.3, we implemented various optimizations for MLA, including weight absorption, grouped decoding kernels, FP8 batched MatMul, and FP8 KV cache quantization.

It outperforms its predecessors in several benchmarks, including AlpacaEval 2.0 (50.5 accuracy), ArenaHard (76.2 accuracy), and HumanEval Python (89 score).

The paper introduces DeepSeekMath 7B, a large language model specifically designed and trained to excel at mathematical reasoning. It was pre-trained on a large amount of math-related data from Common Crawl, totaling 120 billion tokens. When the model's self-consistency is taken into account, the score rises to 60.9%, further demonstrating its mathematical prowess. A more granular analysis of the model's strengths and weaknesses could help identify areas for future improvements. Furthermore, the paper does not discuss the computational and resource requirements of training DeepSeekMath 7B, which could be a critical factor in the model's real-world deployability and scalability.
