Eight Tips With DeepSeek


Author: Simone | Posted: 25-02-01 01:12 | Views: 9 | Comments: 0

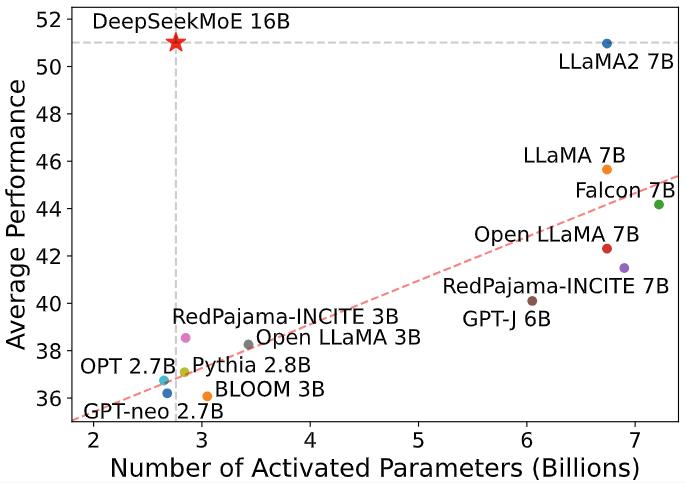

Forbes - topping the company’s (and the stock market’s) previous record for losing money, which was set in September 2024 and valued at $279 billion. Base Models: 7 billion parameters and 67 billion parameters, focusing on general language tasks. The base models were initialized from corresponding intermediate checkpoints after pretraining on 4.2T tokens (not the model at the end of pretraining), then pretrained further for 6T tokens, then context-extended to a 128K context length. Pretrained on 8.1 trillion tokens with a higher proportion of Chinese tokens. Initializes from previously pretrained DeepSeek-Coder-Base. DeepSeek-Coder Base: pre-trained models aimed at coding tasks. Besides, we attempt to organize the pretraining data at the repository level to enhance the pre-trained model’s understanding of cross-file context within a repository. They do this by doing a topological sort on the dependent files and appending them into the context window of the LLM. But beneath all of this I have a sense of lurking horror - AI systems have become so useful that the thing that will set humans apart from one another is not specific hard-won skills for using AI systems, but rather just having a high level of curiosity and agency. We introduce an innovative methodology to distill reasoning capabilities from the long-Chain-of-Thought (CoT) model, specifically from one of the DeepSeek R1 series models, into standard LLMs, particularly DeepSeek-V3.
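The repository-level ordering mentioned above can be sketched in a few lines. This is only an illustration, not DeepSeek's actual pipeline: the function names `order_repo_files` and `build_context`, the `# file:` header, and the example dependency map are all assumptions made up for the sketch. It uses the standard-library `graphlib` module (Python 3.9+).

```python
from graphlib import TopologicalSorter


def order_repo_files(deps: dict[str, set[str]]) -> list[str]:
    """Return file paths so that every file comes after the files it depends on.

    `deps` maps each file to the set of files it imports (hypothetical input;
    a real pipeline would have to parse imports to build it).
    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(deps).static_order())


def build_context(files: dict[str, str], deps: dict[str, set[str]]) -> str:
    """Concatenate file contents in dependency order into one training sample."""
    ordered = order_repo_files(deps)
    # The "# file:" header is an assumed convention, not a documented format.
    return "\n".join(f"# file: {path}\n{files[path]}" for path in ordered if path in files)


# Example: main.py depends on utils.py and models.py; models.py depends on utils.py.
deps = {
    "main.py": {"utils.py", "models.py"},
    "models.py": {"utils.py"},
    "utils.py": set(),
}
files = {
    "utils.py": "def helper(): ...",
    "models.py": "import utils",
    "main.py": "import models",
}
print(order_repo_files(deps))
print(build_context(files, deps))
```

With this ordering, a file's dependencies already sit earlier in the context window when the model reads the file that uses them.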

Much of the forward pass was performed in 8-bit floating point numbers (E5M2: 5-bit exponent and 2-bit mantissa) rather than the standard 32-bit, requiring special GEMM routines to accumulate accurately. In AI there’s this concept of a ‘capability overhang’, which is the idea that the AI systems we have around us today are much, much more capable than we realize. That makes sense. It's getting messier - too many abstractions. Now, getting AI systems to do useful stuff for you is as simple as asking for it - and you don’t even have to be that precise. If we get it wrong, we’re going to be dealing with inequality on steroids - a small caste of people will be getting a vast amount done, aided by ghostly superintelligences that work on their behalf, while a larger set of people watch the success of others and ask ‘why not me?’ While human oversight and instruction will remain crucial, the ability to generate code, automate workflows, and streamline processes promises to accelerate product development and innovation. If we get this right, everyone will be able to achieve more and exercise more of their own agency over their own intellectual world.
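To see why 8-bit floats need careful accumulation, here is a small pure-Python simulation. It is a sketch under assumptions, not the GEMM routine the text refers to: `round_to_e5m2`, `dot_narrow`, and `dot_wide` are hypothetical helpers, E5M2 is simulated by keeping the top 8 bits of the float16 encoding (same sign and exponent layout, 2 of the 10 mantissa bits), and Python's float64 stands in for a wider hardware accumulator.

```python
import math
import struct


def round_to_e5m2(x: float) -> float:
    """Round x to the nearest E5M2 value (ties to even), via its float16 bits.

    Simplification: x is rounded to float16 first and then to E5M2, so rare
    double-rounding differences are possible. Values too large for float16
    make struct.pack raise OverflowError.
    """
    if math.isnan(x) or math.isinf(x):
        return x
    (bits,) = struct.unpack("<H", struct.pack("<e", x))  # float16 bit pattern
    lsb = (bits >> 8) & 1                      # lowest bit that survives
    rounded = (bits + 0x7F + lsb) & 0xFF00     # drop 8 mantissa bits, round to even
    (y,) = struct.unpack("<e", struct.pack("<H", rounded))
    return y


def dot_narrow(a: list[float], b: list[float]) -> float:
    """Dot product whose running sum is itself stored in E5M2."""
    acc = 0.0
    for x, y in zip(a, b):
        acc = round_to_e5m2(acc + round_to_e5m2(x) * round_to_e5m2(y))
    return acc


def dot_wide(a: list[float], b: list[float]) -> float:
    """Dot product with E5M2 inputs but a wide (here float64) accumulator."""
    return sum(round_to_e5m2(x) * round_to_e5m2(y) for x, y in zip(a, b))


ones = [1.0] * 16
print(dot_narrow(ones, ones))  # the running sum stalls once 1.0 is below its resolution
print(dot_wide(ones, ones))
```

In this toy case the narrow accumulator stops growing at 8.0, because the next representable E5M2 value is 10.0 and 8 + 1 rounds back down, while the wide accumulator reaches 16.0.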

Perhaps more importantly, distributed training seems to me to make many things in AI policy harder to do. In addition, per-token probability distributions from the RL policy are compared to the ones from the initial model to compute a penalty on the difference between them. So it’s not hugely surprising that Rebus appears very hard for today’s AI systems - even the most powerful publicly disclosed proprietary ones. Solving for scalable multi-agent collaborative systems can unlock much potential in building AI applications. This innovative approach has the potential to greatly accelerate progress in fields that rely on theorem proving, such as mathematics, computer science, and beyond. In addition to employing the next-token prediction loss during pre-training, we have also incorporated the Fill-In-Middle (FIM) approach. Our analysis indicates that the implementation of Chain-of-Thought (CoT) prompting notably enhances the capabilities of DeepSeek-Coder-Instruct models. Therefore, we strongly recommend employing CoT prompting strategies when using DeepSeek-Coder-Instruct models for complex coding challenges.
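The per-token penalty described above is commonly implemented as a KL divergence between the two models' next-token distributions. The following is a minimal sketch under that assumption; the text does not say which divergence is used, and the function names and the coefficient `beta` are hypothetical choices for illustration.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Turn raw logits into a probability distribution (numerically stable)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: list[float], q: list[float]) -> float:
    """KL(p || q) for two distributions over the same vocabulary."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


def per_token_kl_penalty(
    policy_logits: list[list[float]],
    ref_logits: list[list[float]],
    beta: float = 0.1,  # assumed penalty coefficient, not taken from the text
) -> list[float]:
    """One penalty per generated token: beta * KL(policy || initial model)."""
    return [
        beta * kl_divergence(softmax(pl), softmax(rl))
        for pl, rl in zip(policy_logits, ref_logits)
    ]


# Two token positions over a toy 3-word vocabulary.
ref = [[1.0, 2.0, 3.0], [0.5, 0.5, 0.5]]
policy = [[1.0, 2.0, 3.0], [2.0, 0.5, 0.5]]  # second position has drifted
print(per_token_kl_penalty(policy, ref))
```

The penalty is zero where the RL policy still matches the initial model and grows as the policy drifts away from it; in a typical setup it is subtracted from the reward at each token.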

